Many organizations are migrating workloads to Azure Landing Zones (LZs). A key principle in that model is that each development team owns its own LZs. Microsoft’s “Cloud Adoption Framework” (CAF) helps architects create the high-level designs, while the “Well-Architected Framework” (WAF) is a more detailed guide to migrating workloads, with some great design principles. Neither of them, however, is detailed enough on its own to help LZ owners set up traffic paths and flows in a secure manner.

The thing with Azure is that it is “working by design”, meaning there are standard rulesets and configurations in place to “just make things work”. This helps everyone get workloads running fast, but securing them requires work and knowledge.

In the frameworks (CAF and WAF), Microsoft advises using private endpoints where possible, using NSGs everywhere, setting up a web application firewall, and controlling the flow of traffic with route tables. As guiding principles this sounds easy enough; however, when actually trying to design the flow of traffic within an LZ it quickly becomes complex. This post will try to help guide the process towards a more secure setup.

Virtual Networks (vNets)

A virtual network in Azure is really just a placeholder to reserve IP space. To actually deploy network interfaces and carry any traffic you need to provision subnets. All traffic in a vNet must originate from a subnet, and filtering and routing both happen at the subnet level. A vNet is essentially a collection of subnets that share peerings to other vNets and share DNS configuration, both of which are configured at the vNet level.
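To make the relationship between a vNet and its subnets concrete, here is a minimal sketch using Python's standard `ipaddress` module. The address space `10.10.0.0/22` and the subnet names are hypothetical and chosen only for illustration.

```python
import ipaddress

# Hypothetical address space reserved by the vNet.
vnet = ipaddress.ip_network("10.10.0.0/22")

# Carve the vNet into /24 subnets; the names are illustrative only.
names = ["frontend", "backend", "private-endpoints", "integration"]
subnets = dict(zip(names, vnet.subnets(new_prefix=24)))

for name, net in subnets.items():
    # Azure reserves 5 addresses in every subnet (the first four and the last).
    usable = net.num_addresses - 5
    print(f"{name:18} {net}  usable addresses: {usable}")
```

The vNet itself only holds the range; every address that can actually be assigned to a NIC belongs to one of the subnets.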

Network Security Groups (NSGs)

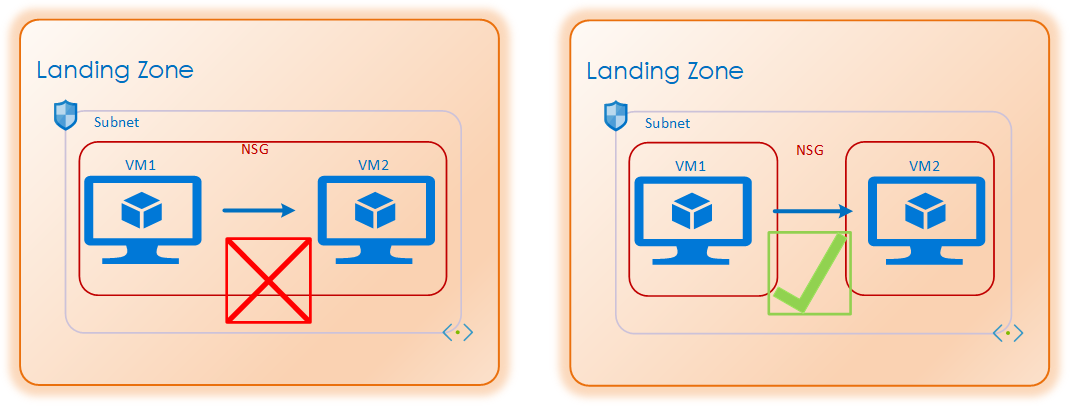

The current standard is an NSG surrounding each subnet. This is the best practice, and each subnet should always have its own unique NSG. On the surface NSGs may look simple; a classic access list, one might think. But that is not the case. An NSG is an L3/L4 filter, but rather than filtering only traffic in and out of a subnet, it filters all traffic within the subnet as well. Imagine an NSG around each NIC within the subnet.

This means that for traffic between two hosts within a single subnet there is a need for both an outbound and an inbound rule to allow the traffic flow.
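The per-NIC behaviour can be illustrated with a small toy model. This is not Azure's rule engine, just a sketch that assumes everything not explicitly allowed is denied; the `Rule` class, the helper functions, the host addresses and the port are all hypothetical.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network

@dataclass(frozen=True)
class Rule:
    direction: str      # "Inbound" or "Outbound"
    source: str         # CIDR prefix
    destination: str    # CIDR prefix
    port: int

def permits(rules, direction, src, dst, port):
    """True if any allow rule in the given direction matches the flow."""
    return any(
        r.direction == direction
        and ip_address(src) in ip_network(r.source)
        and ip_address(dst) in ip_network(r.destination)
        and r.port == port
        for r in rules
    )

def intra_subnet_flow_allowed(rules, src, dst, port):
    # The same subnet NSG is applied at the sender's NIC (outbound)
    # and again at the receiver's NIC (inbound).
    return permits(rules, "Outbound", src, dst, port) and permits(
        rules, "Inbound", src, dst, port
    )

host_a, host_b = "10.10.1.4", "10.10.1.5"  # two hosts in the same subnet
inbound_only = [Rule("Inbound", "10.10.1.4/32", "10.10.1.5/32", 1433)]
both = inbound_only + [Rule("Outbound", "10.10.1.4/32", "10.10.1.5/32", 1433)]

print(intra_subnet_flow_allowed(inbound_only, host_a, host_b, 1433))  # False
print(intra_subnet_flow_allowed(both, host_a, host_b, 1433))          # True
```

With only the inbound rule in place the flow is dropped at the sender's NIC, even though both hosts sit behind the same NSG.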

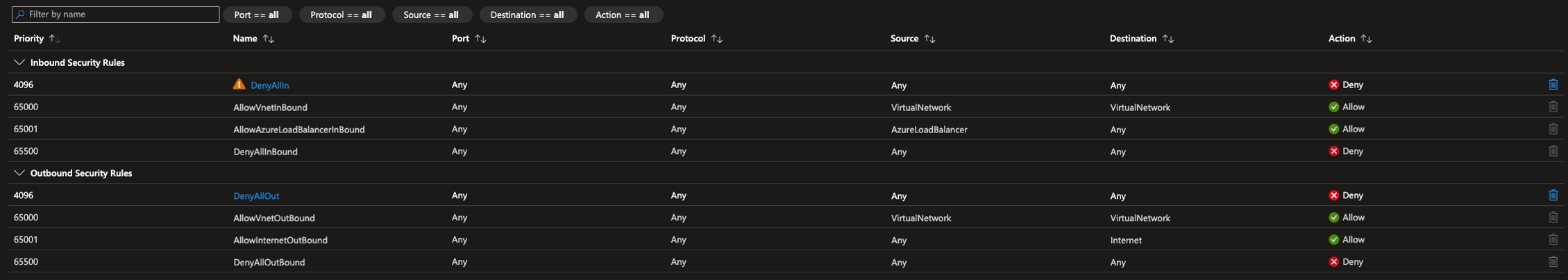

Microsoft provides a set of default rules in every NSG.

These rules allow all traffic on all ports within the vNet. This is great for just making things work, but it breaks all rules of micro-segmentation and Zero-Trust.

Microsoft on “What is an example of Zero Trust Network”: “A Zero Trust network fully authenticates, authorizes, and encrypts every access request, applies microsegmentation and least-privilege access principles to minimize lateral movement, and uses intelligence and analytics to detect and respond to anomalies in real time.” https://www.microsoft.com/en-us/security/business/zero-trust

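The Zero-Trust framework, the principle of least privilege and micro-segmentation all state that access should only be granted where needed. All openings in NSGs should be explicit and there for a reason. The best practice for NSGs is to negate the default rules with a deny any-any rule, both outbound and inbound, using the highest priority number available to custom rules (4096). Because NSG rules are evaluated from the lowest priority number to the highest, this rule is processed after your explicit allow rules but before the default rules, which start at 65000. All openings should be intentional.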
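The effect of such a deny rule can be sketched with a simplified evaluator. Azure processes NSG rules in ascending priority number and stops at the first match; the three default inbound rules below use Azure's names and priorities, while the `Rule` class, the `evaluate` function, the matching logic (plain string comparison) and the custom rule names are assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    priority: int   # lower number = evaluated first
    name: str
    access: str     # "Allow" or "Deny"
    source: str     # simplified: a label such as "VirtualNetwork", or "*"
    port: str       # a port number as a string, or "*"

# Azure's default inbound rules, reduced to the fields modelled here.
DEFAULT_INBOUND = [
    Rule(65000, "AllowVnetInBound", "Allow", "VirtualNetwork", "*"),
    Rule(65001, "AllowAzureLoadBalancerInBound", "Allow", "AzureLoadBalancer", "*"),
    Rule(65500, "DenyAllInBound", "Deny", "*", "*"),
]

def evaluate(rules, source, port):
    """Return (name, access) of the first matching rule by priority."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.source in ("*", source) and rule.port in ("*", port):
            return rule.name, rule.access
    raise LookupError("no rule matched")

custom = [
    # Hypothetical explicit opening.
    Rule(100, "AllowWebFromVnet", "Allow", "VirtualNetwork", "443"),
    # Last custom slot: catches everything before the defaults are reached.
    Rule(4096, "DenyAnyAnyInbound", "Deny", "*", "*"),
]

print(evaluate(DEFAULT_INBOUND, "VirtualNetwork", "22"))           # ('AllowVnetInBound', 'Allow')
print(evaluate(custom + DEFAULT_INBOUND, "VirtualNetwork", "22"))  # ('DenyAnyAnyInbound', 'Deny')
print(evaluate(custom + DEFAULT_INBOUND, "VirtualNetwork", "443")) # ('AllowWebFromVnet', 'Allow')
```

Placing the deny rule in the last custom slot leaves every lower number free for explicit, documented openings.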

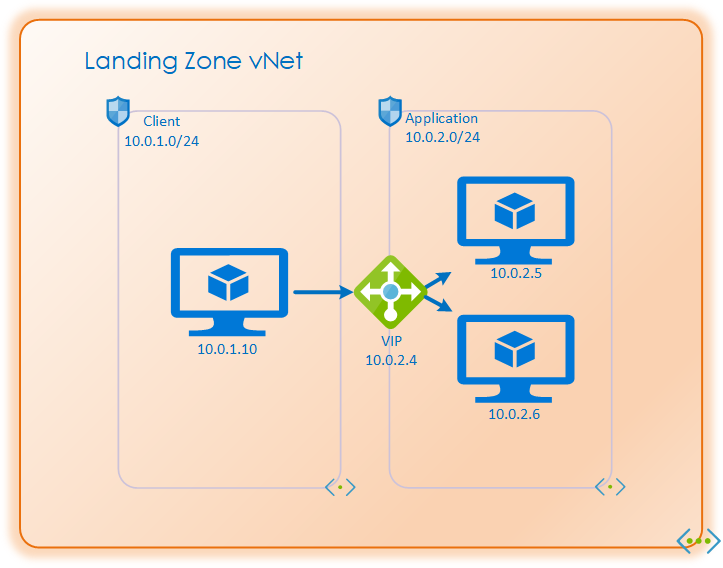

This creates some challenges for the native functionality of load balancers and similar services, which rely on these rules. But there are still ways to make it work without compromising security. The important thing to understand is that an Azure Load Balancer performs Network Address Translation (NAT) before the traffic is filtered by the NSG. Inbound rules for traffic that passes through a load balancer must therefore list all members of the backend pool as allowed destinations.

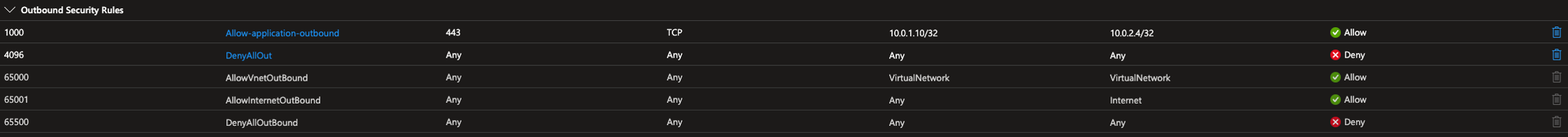

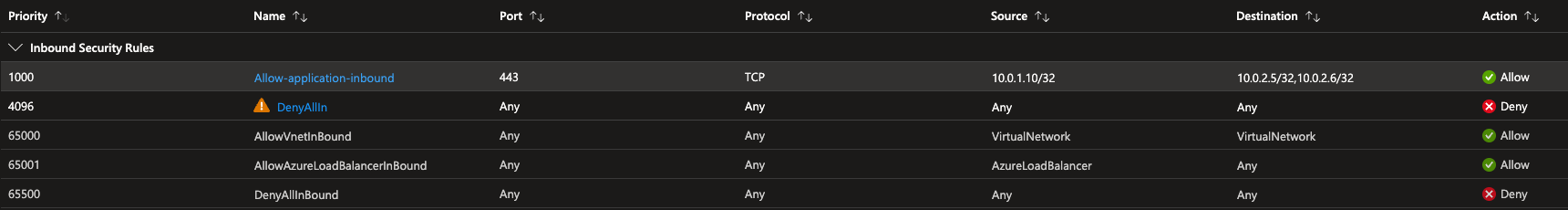

In the illustration above the Client NSG would need:

Outbound Rule

While the Application NSG would need:

Inbound rule

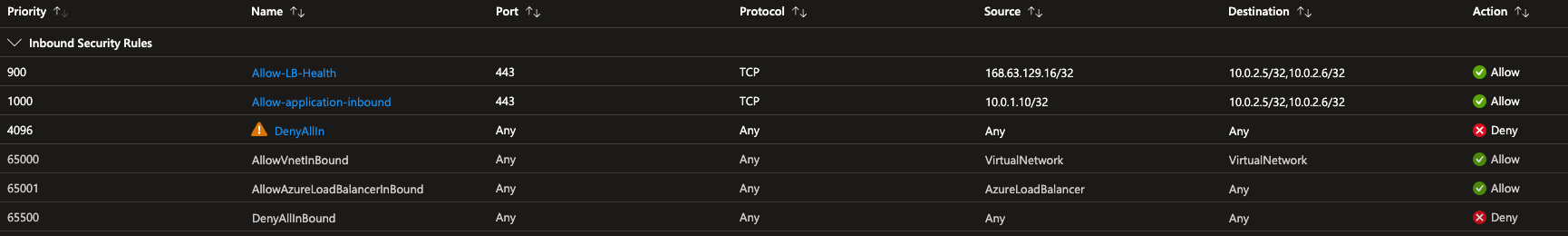

In addition, the Application NSG also needs to allow inbound health probes from the load balancer. Those probes originate from 168.63.129.16 and target the port chosen for the health probe. https://learn.microsoft.com/en-us/azure/load-balancer/load-balancer-custom-probe-overview

The result looks like the picture above.
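The load balancer rules can also be sketched in code. The probe source address 168.63.129.16 comes from the text above; the helper functions, the rule names, the ports, the client subnet and every other IP address are hypothetical. The point of the sketch is that the destinations are the backend pool members, since the NSG sees the traffic after NAT.

```python
from ipaddress import ip_address, ip_network

PROBE_SOURCE = "168.63.129.16"  # source address of Azure health probes

def application_inbound_rules(client_prefix, backend_pool, app_port, probe_port):
    """Build inbound allow rules for the Application NSG.

    Destinations are the backend pool members, not the load balancer
    frontend, because the NSG filters the traffic after NAT.
    """
    rules = [
        {"name": f"AllowClientTo{i}", "source": client_prefix,
         "destination": f"{member}/32", "port": app_port}
        for i, member in enumerate(backend_pool, start=1)
    ]
    rules += [
        {"name": f"AllowProbeTo{i}", "source": f"{PROBE_SOURCE}/32",
         "destination": f"{member}/32", "port": probe_port}
        for i, member in enumerate(backend_pool, start=1)
    ]
    return rules

def allowed(rules, src, dst, port):
    """True if any rule matches the source, destination and port."""
    return any(
        ip_address(src) in ip_network(r["source"])
        and ip_address(dst) in ip_network(r["destination"])
        and port == r["port"]
        for r in rules
    )

# Hypothetical addressing.
frontend_ip = "10.10.2.10"
backend_pool = ["10.10.2.4", "10.10.2.5"]
rules = application_inbound_rules("10.10.1.0/24", backend_pool, 443, 8080)

print(allowed(rules, "10.10.1.7", frontend_ip, 443))    # False: frontend IP is not a destination
print(allowed(rules, "10.10.1.7", "10.10.2.4", 443))    # True: backend member after NAT
print(allowed(rules, PROBE_SOURCE, "10.10.2.5", 8080))  # True: health probe
```

A rule that only names the load balancer frontend as its destination would never match, which is a common reason traffic is dropped after the default rules have been negated.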


Web Application Firewall (WAF)

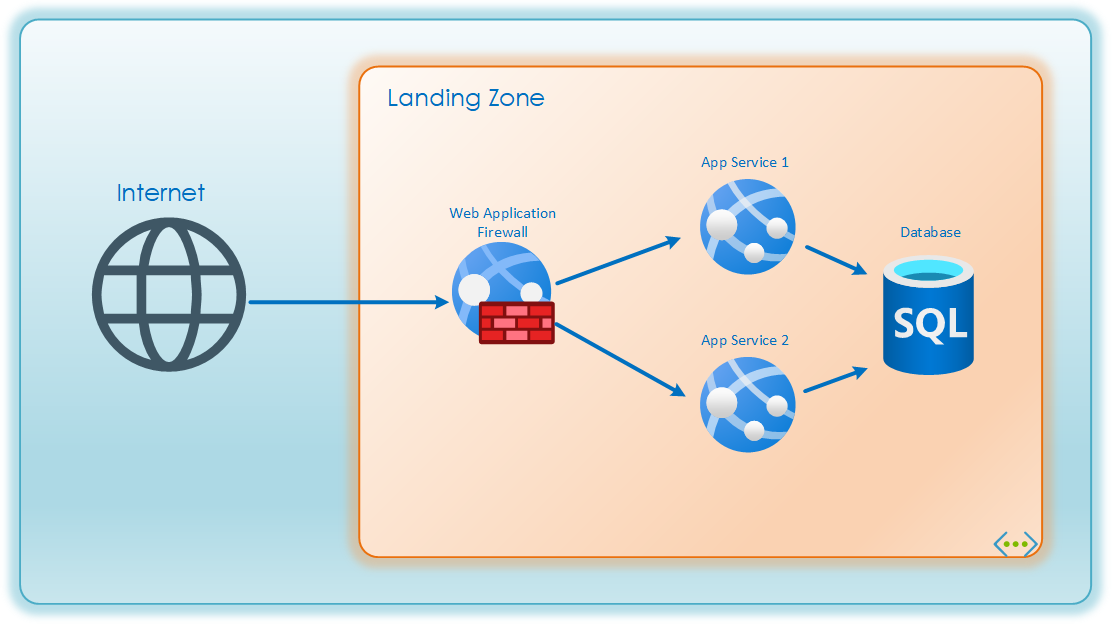

Azure Application Gateway WAF can be added in front of applications. The WAF decrypts and inspects user traffic, logs suspicious activity, and blocks attempts to compromise the applications. Most companies require a WAF in front of at least their public-facing web applications, and more and more use one for internal applications as well. Many regulatory standards require a WAF solution. The WAF will source-NAT the traffic, so the applications can restrict network access to their endpoints to traffic coming from the WAF. This post will not dive deeper into WAF technology; suffice it to say that it is a field of study on its own, and there are many products available in addition to Azure WAF.

Private Endpoints (PEs)

Microsoft PaaS services such as Azure Web Apps, Azure SQL Database, and Storage accounts are public-facing towards the internet by default. To lock such a service down to your local network with a private IP, you need to configure a private endpoint for it. Note that private endpoints can only ever receive traffic. There is no way to generate outbound traffic from a private endpoint.

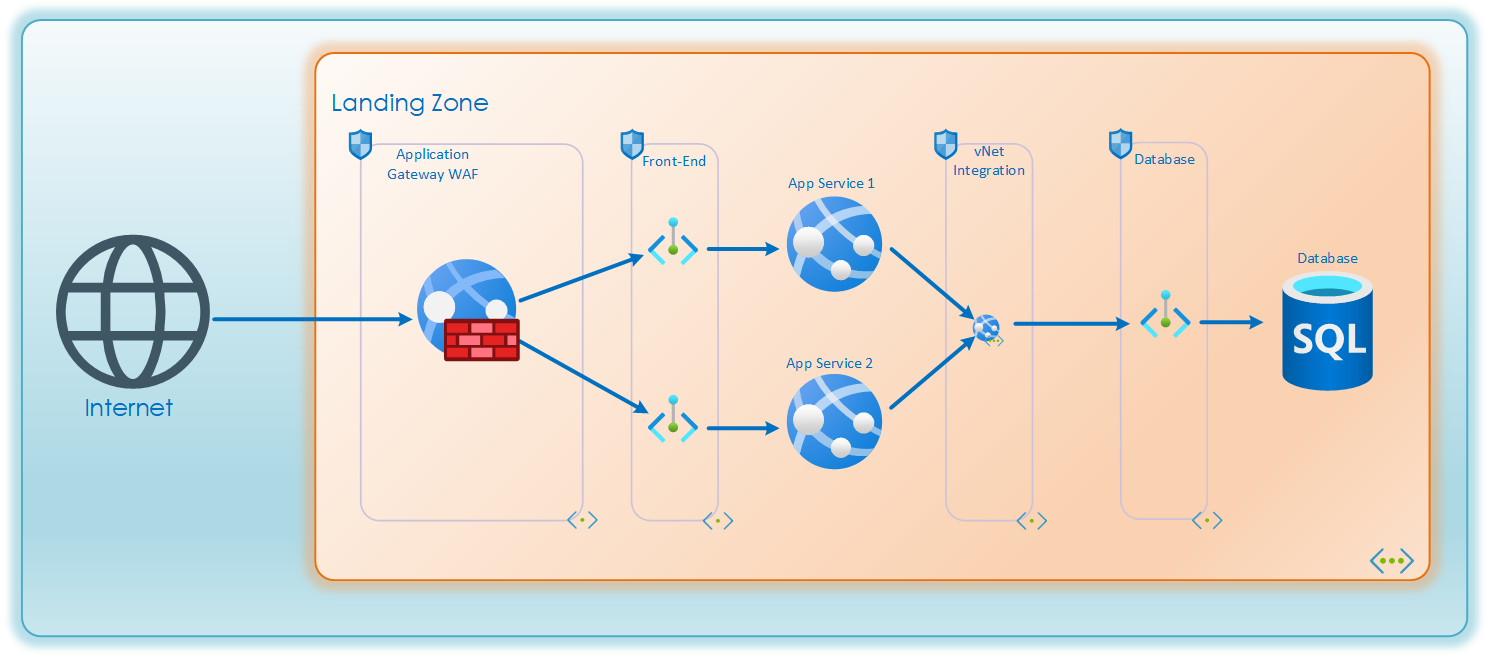

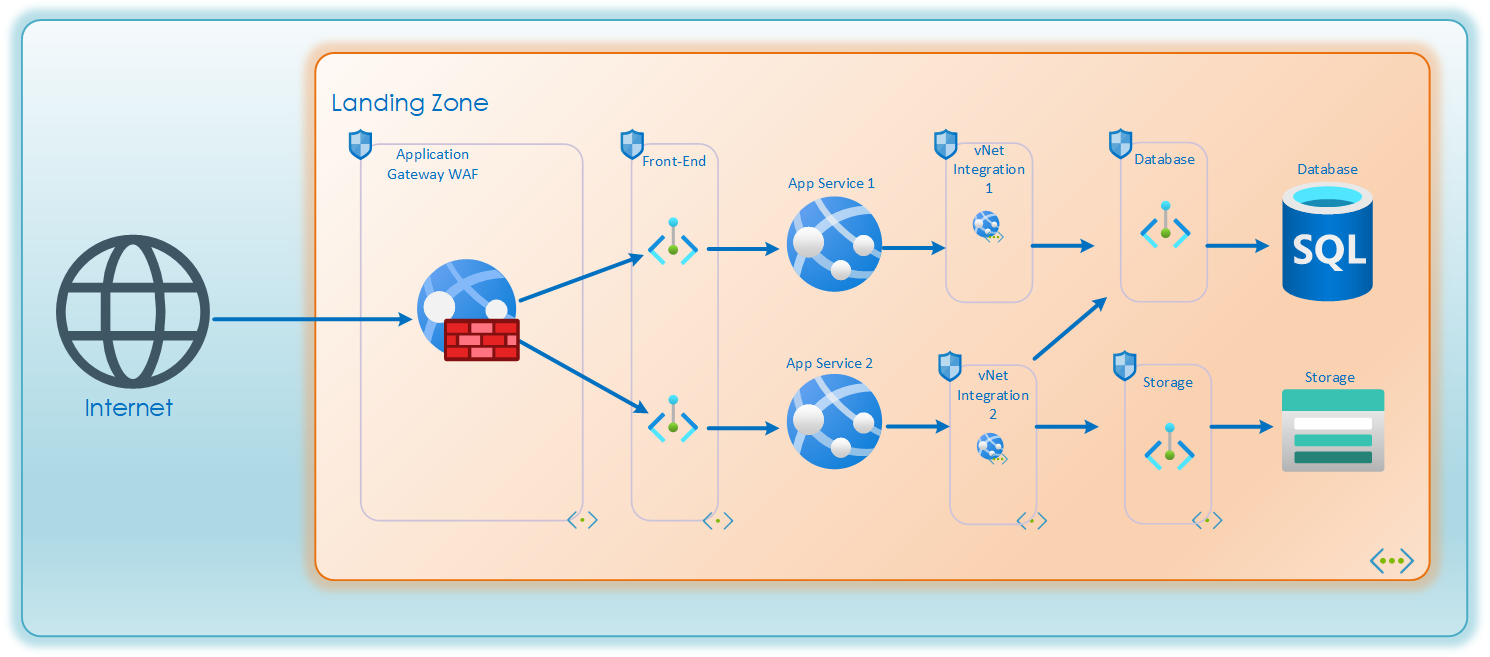

As an example, consider a distributed application migrated to two Azure App Services, each with its own private endpoint. The private endpoint gives each App Service a private listening IP where the application can be reached over the local network. Public access to the App Services can now be disabled, so that the only way to reach the application is through the private endpoints.

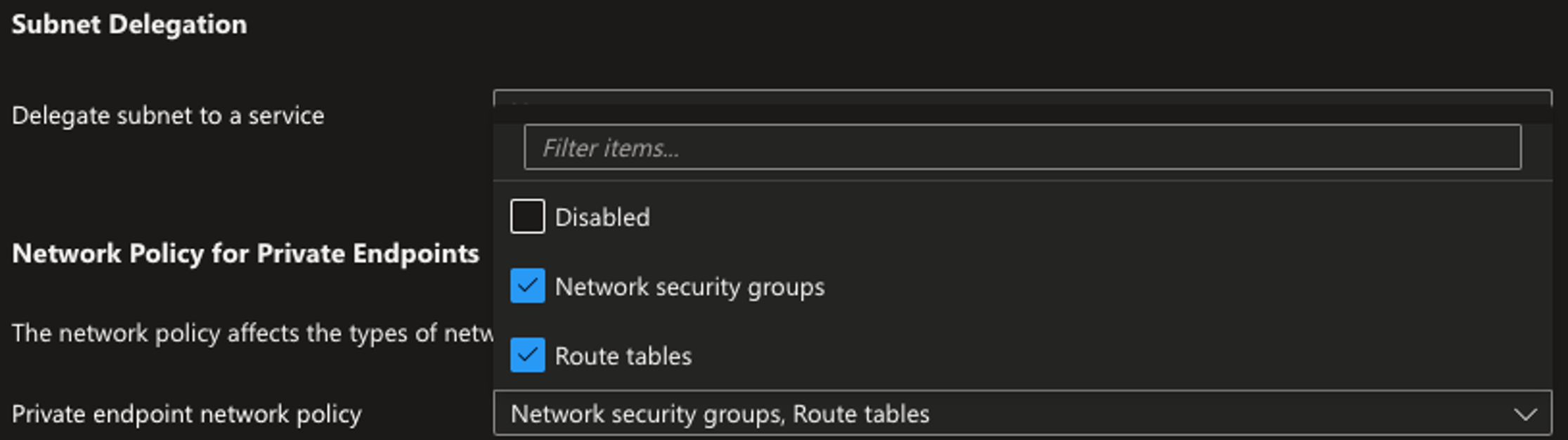

Note that for route tables and NSGs to apply to private endpoints, network policies for private endpoints must be enabled on the subnet.

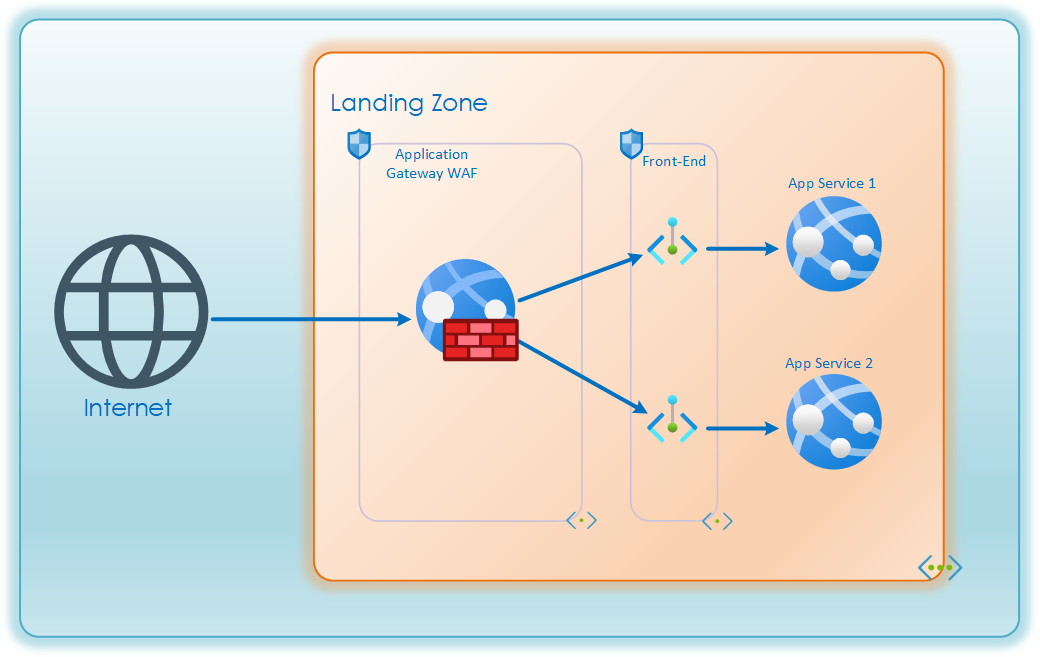

Microsoft’s Well-Architected Framework states that all PaaS services should use private endpoints. It does not, however, state how those private endpoints should be placed with regard to subnets. Best practice is to keep the applications as segmented as possible, but since each private endpoint holds a unique private IP, it is possible to limit access to each one through the NSGs. It is common to group at least web applications at the same tier on the same subnet, so that all front-end applications share a subnet but do not share it with backend applications or solutions such as storage or databases.

Virtual Network Integration (vNet Integration)

Most applications require connectivity towards databases, storage accounts etc. To facilitate this, Microsoft has added the vNet Integration feature, which allows PaaS products to make outbound requests on the local virtual network.

vNet integration works by choosing a random IP from the integration subnet as the source of each request. This is great for remediating source-NAT port exhaustion, but it also creates a security challenge: for all apps that share an integration subnet, there is no way for any NSG, firewall or other network device to discern which app is generating a request. For enterprises with strict network access rules, opening up critical databases or archive solutions to all applications in an LZ will not be allowed. In such cases it is advisable to designate one integration subnet per application, so that traffic from each app can be filtered and controlled.
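A minimal sketch of the one-subnet-per-application approach, again using Python's `ipaddress` module. The integration range, the /27 subnet size, the app names and the database address are all hypothetical; size the subnets for the scale-out your apps actually need.

```python
from ipaddress import ip_address, ip_network

# Hypothetical range set aside for integration subnets.
integration_range = ip_network("10.10.3.0/24")
apps = ["orders-api", "billing-api", "reporting"]  # hypothetical app names

# One /27 per application, so the source subnet identifies the app.
app_subnets = dict(zip(apps, integration_range.subnets(new_prefix=27)))

def app_for_source(ip):
    """Map a source IP seen by an NSG or firewall back to its application."""
    for app, subnet in app_subnets.items():
        if ip_address(ip) in subnet:
            return app
    return None

# Only billing-api may reach the (hypothetical) database endpoint.
database_rule = {
    "source": str(app_subnets["billing-api"]),
    "destination": "10.10.2.20/32",
    "port": 1433,
}

print(database_rule["source"])       # 10.10.3.32/27
print(app_for_source("10.10.3.40"))  # billing-api
print(app_for_source("10.10.3.5"))   # orders-api
```

Because each app draws its random source IP from its own subnet, a rule scoped to that prefix applies to exactly one application. With a shared integration subnet the same rule would have to admit every app in it.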

This is the basis of designing traffic paths within a Landing Zone. I hope you have learned something from this. Landing Zones often have integrations and dependencies outside their own LZ. Later in this blog series we will dig into securing that traffic as well.